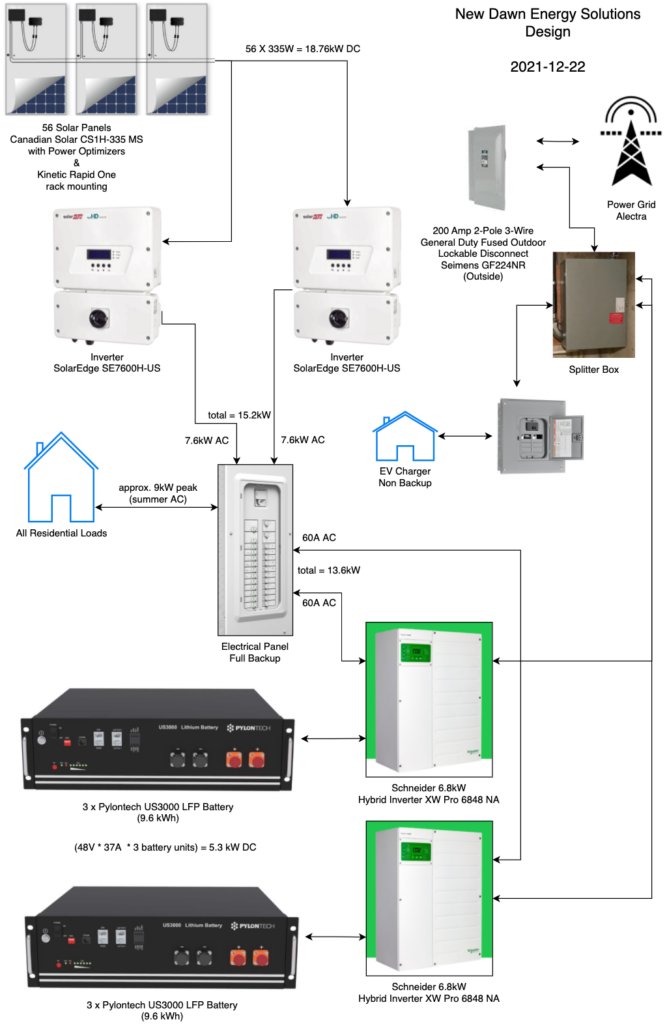

As of my previous post, all major installations had been completed. Since then, the ESA inspection has been completed and we have validated our batteries, so we are confident they will last more than a day in the worst-case scenario (no sun). However, at the time of writing, we are still waiting for Alectra Utilities to replace our old meter with a new, net-metering-capable one. Until the meter is replaced, Alectra will charge us for every watt-hour (Wh) of energy we produce and send back to the grid, as if we were using that energy instead of producing it. Here is a summary of the timeline since the panel installation:

- Solar panel installation completed on April 8th;

- ESA Inspection on April 12th;

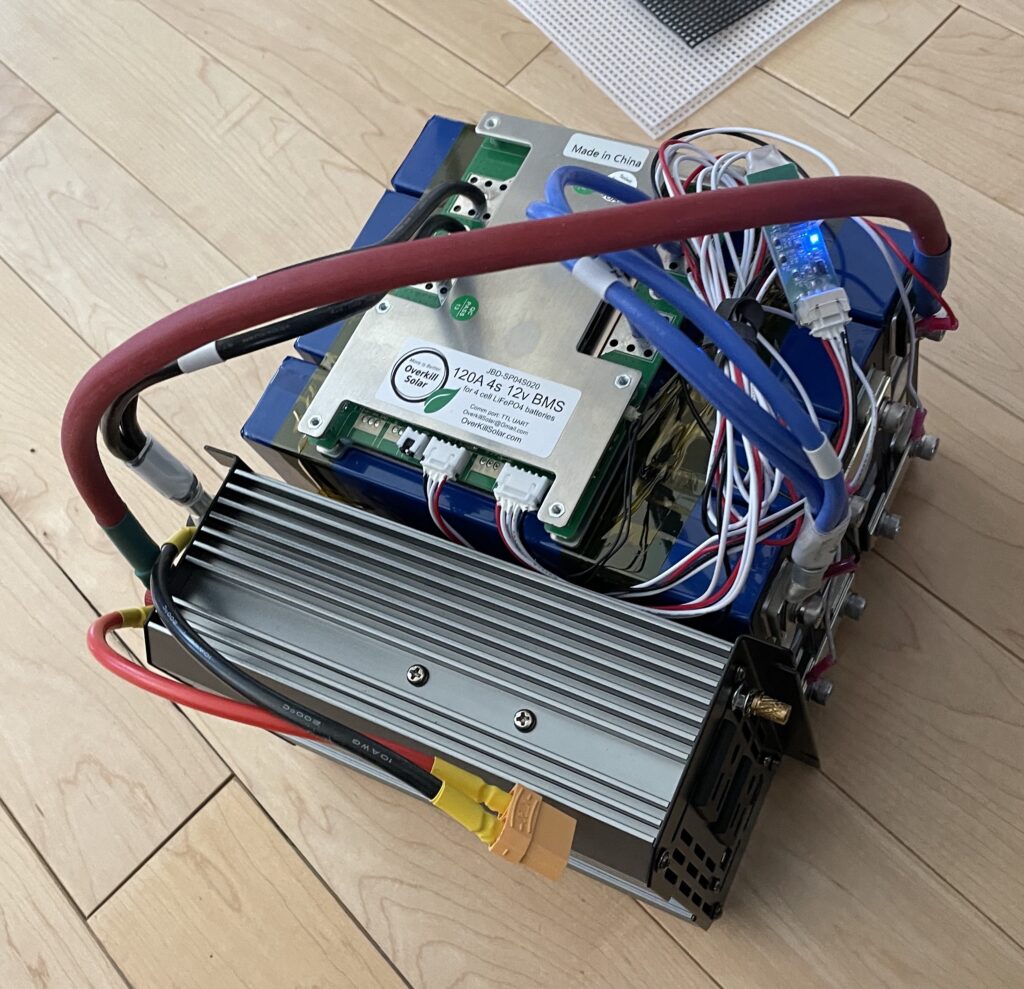

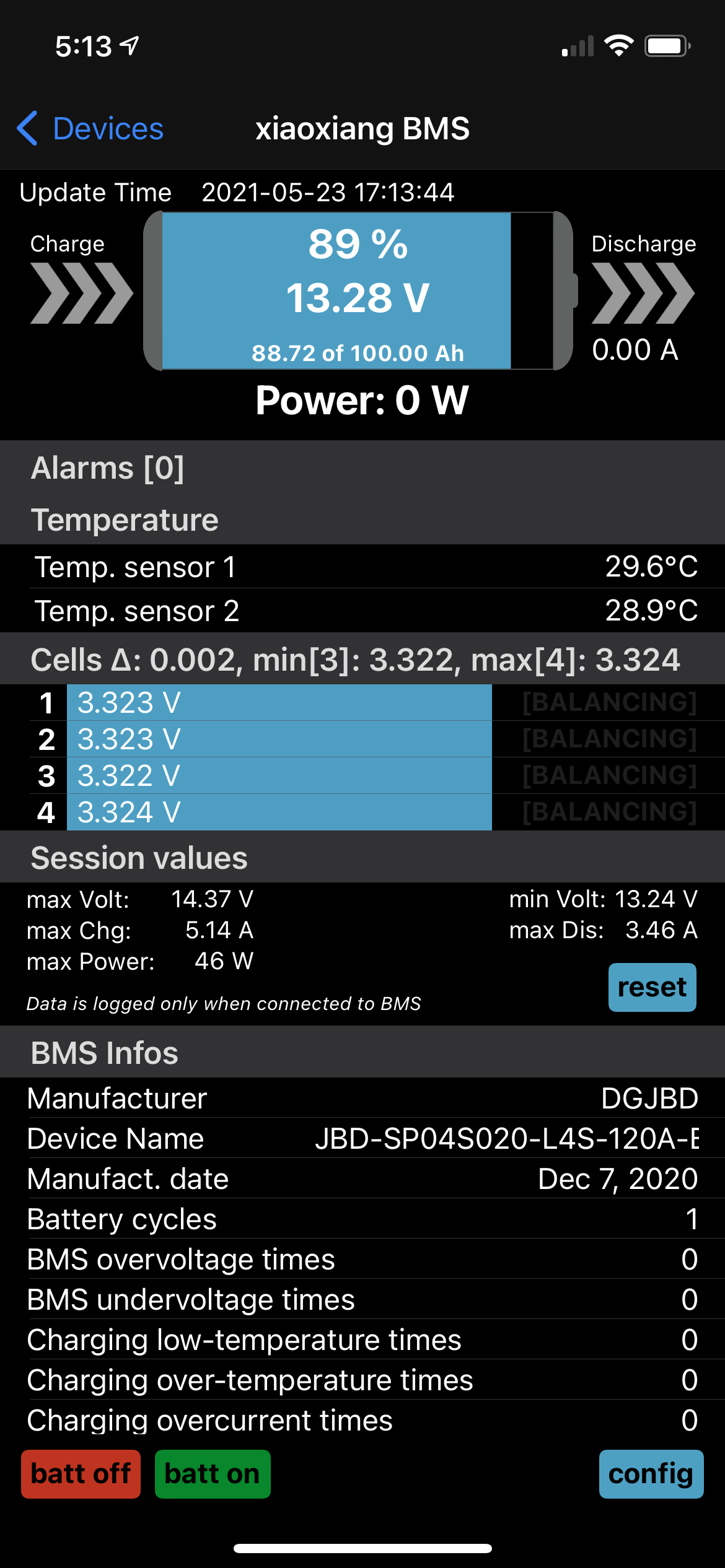

- New LiFePO4 batteries installed on April 18th;

- From April 18th onwards, we tested the system through a series of scenarios;

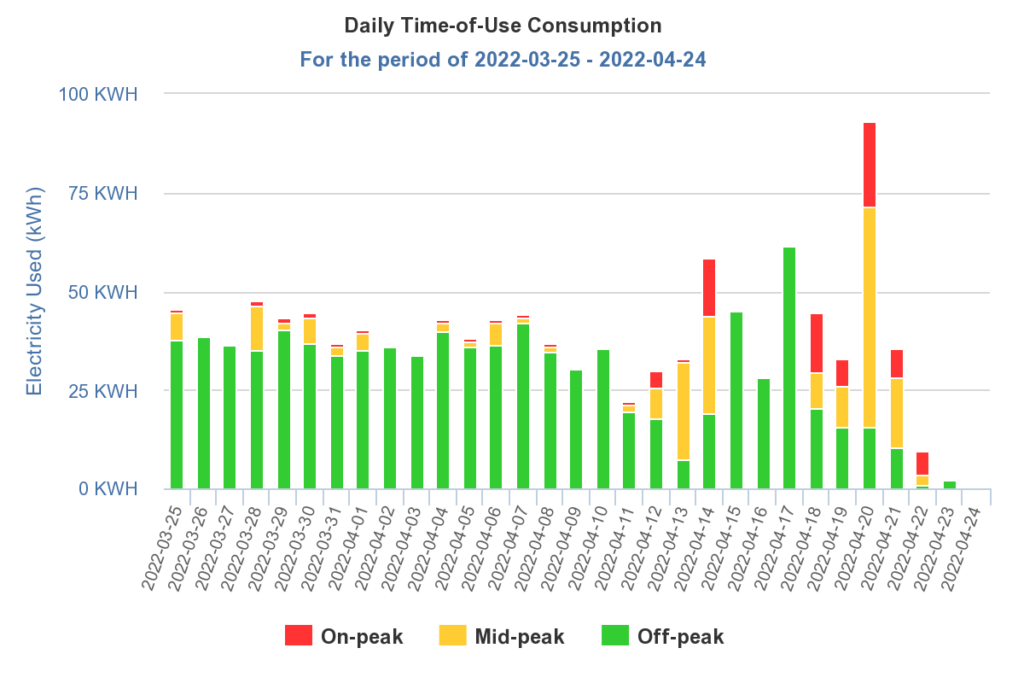

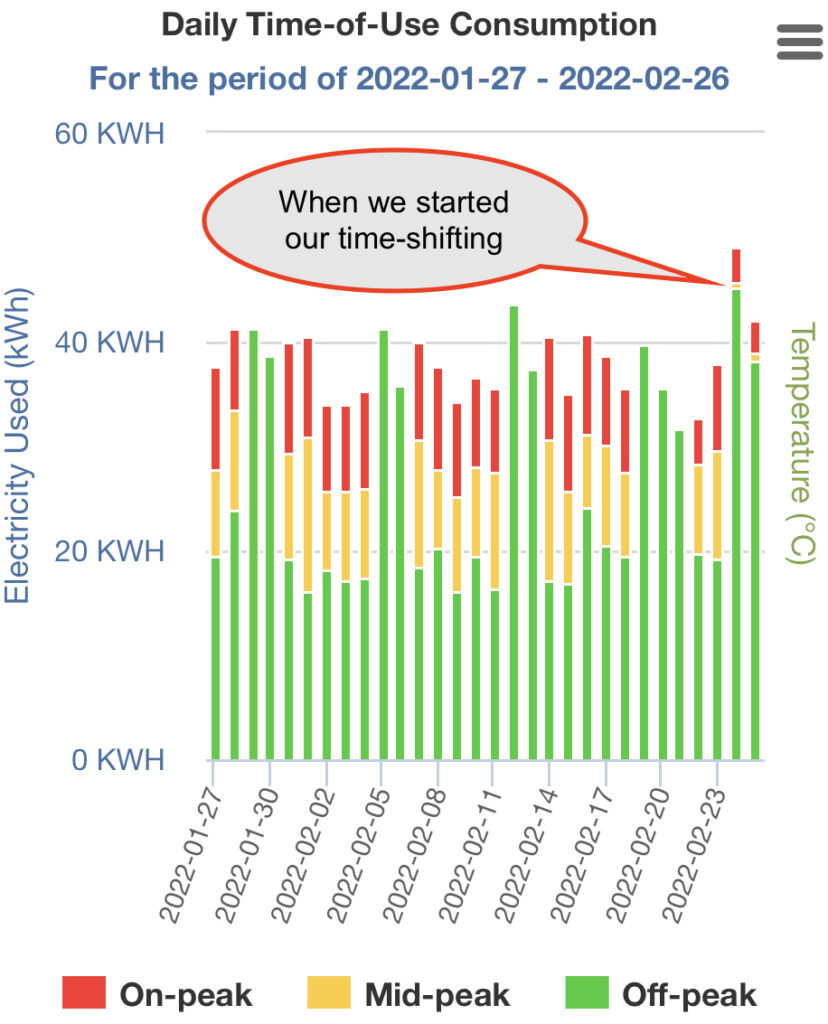

Prior to the ESA inspection on April 12th, we continued our on-peak time shifting. As the chart shows, there was very little on-peak usage (red indicator) before April 12th. Once the ESA inspection was completed, we turned on our solar panels for the first time.

The erratic “usage” shown in the chart above after April 12th is a direct result of excess solar energy being exported back to the grid. Because our net meter has yet to be installed, Alectra records it as usage, and unfortunately I will have to pay for that generation. Very ironic, if you ask me.
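To make the irony concrete, here is a minimal sketch comparing how exported energy is counted before and after the meter swap. It assumes the old meter simply adds energy flowing in either direction to our usage (the behaviour described above), while a net meter subtracts exports from imports. The function names and the sample numbers are hypothetical and are not Alectra's actual billing rules.

```python
def billed_kwh_old_meter(imported_kwh: float, exported_kwh: float) -> float:
    """Hypothetical old meter: energy flowing either way is counted as usage."""
    return imported_kwh + exported_kwh


def billed_kwh_net_meter(imported_kwh: float, exported_kwh: float) -> float:
    """Hypothetical net meter: exports offset imports (a negative result is a credit)."""
    return imported_kwh - exported_kwh


if __name__ == "__main__":
    # Hypothetical sunny day: 10 kWh drawn from the grid, 60 kWh exported to it.
    imported, exported = 10.0, 60.0
    print(f"Old meter bills: {billed_kwh_old_meter(imported, exported):.1f} kWh")
    print(f"Net meter bills: {billed_kwh_net_meter(imported, exported):.1f} kWh")
```

With these made-up numbers the old meter would bill 70 kWh for a day on which we were actually a net producer of 50 kWh.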

Nevertheless, we have gathered a lot of data over the last couple of weeks. We tested the system in both on-grid and off-grid operation, including with washer and dryer loads. Today, a bright sunny day, I even tried running our air conditioner while off grid. It started without any issues and ran on solar energy alone. Impressive. I will try again at night, when we run on the batteries only.

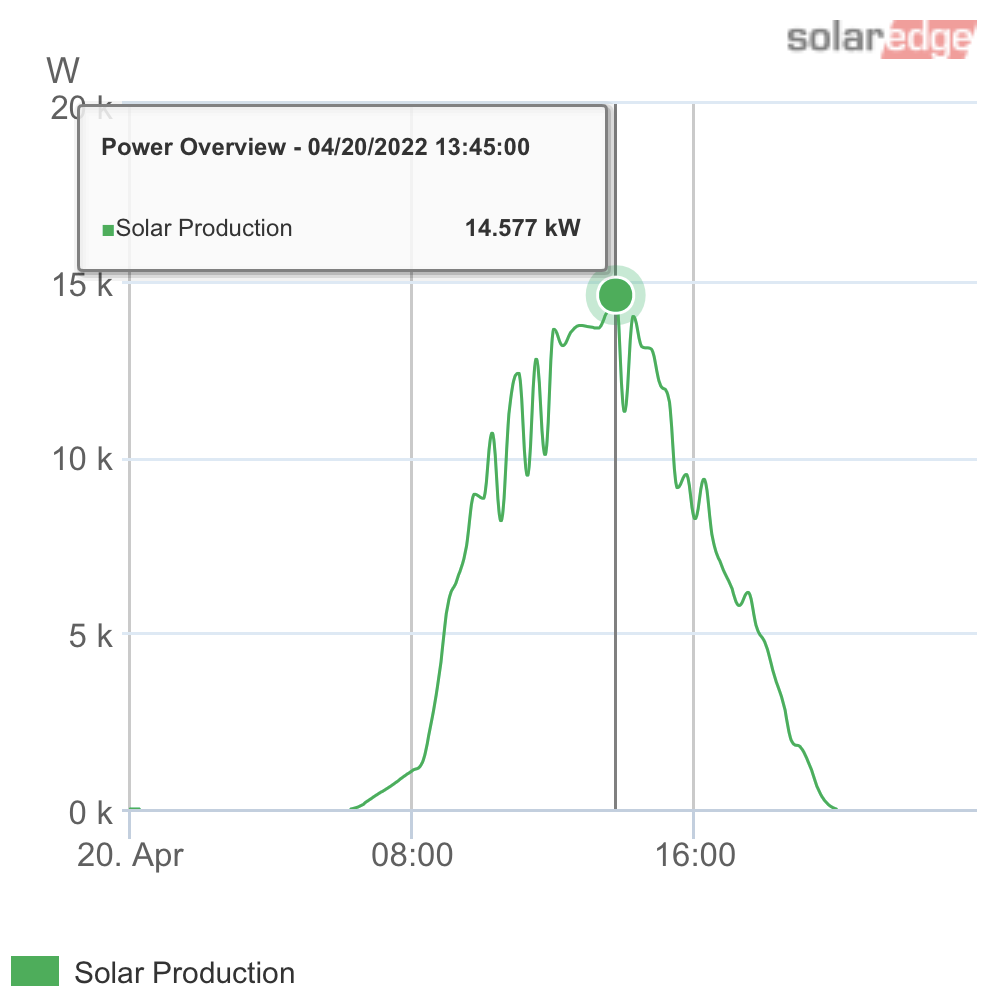

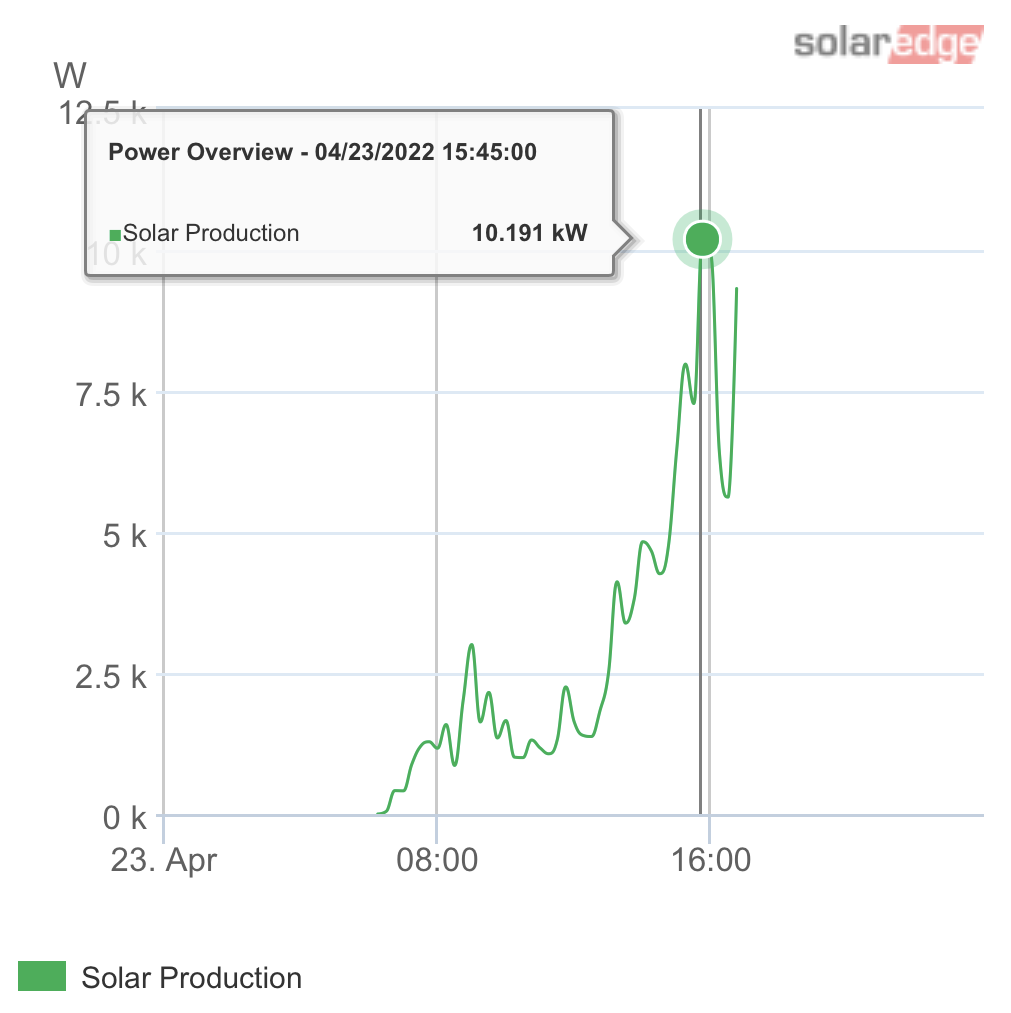

Let us take a look at the energy generation data we have collected so far. It surprised us, in a good way. The best way to show this is with the data from our best day to date.

On April 20th, we had a beautiful sunny day and generated 103.63 kWh of electricity. Since the house could not use it all, we fed most of it back to the grid. This was an excellent run and really shows what the panels are capable of. For comparison, our average daily use is between 30 and 40 kWh, so a single sunny day of generation can cover roughly 2.5 to 3.5 days of our usage. For those Tesla drivers out there, we can generate enough energy to fill your “tank”.
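The coverage figure is simply the day's generation divided by daily consumption. A quick check of the arithmetic, using the April 20th total and our 30 to 40 kWh daily range:

```python
GENERATION_KWH = 103.63       # April 20th generation, from the data above
DAILY_USE_KWH = (30.0, 40.0)  # our typical daily consumption range


def days_covered(generation_kwh: float, daily_use_kwh: float) -> float:
    """Number of days of household use that one day's generation could cover."""
    return generation_kwh / daily_use_kwh


for use in DAILY_USE_KWH:
    print(f"At {use:.0f} kWh/day: {days_covered(GENERATION_KWH, use):.2f} days")
# prints:
# At 30 kWh/day: 3.45 days
# At 40 kWh/day: 2.59 days
```

At the high end of our usage one sunny day covers about 2.6 days; at the low end, about 3.5 days.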

Total generation: 32.53 kWh (not whole day)

Yesterday started with a rainy, cloudy morning, yet on average power generation kept up with the house load. We woke up with the batteries at about 50% state of charge, and by 3 pm the system had gained around 10%. After 3 pm, the sun came out and the batteries charged rapidly, easily reaching 87% state of charge. At around 5 pm I had to shut down solar generation; otherwise the energy would have had nowhere to go. This leads to another major dilemma for off-grid operation.

During on-grid operation, the grid can regulate and absorb the excess energy generated by our solar panels. This is a huge convenience, but one we cannot really take advantage of until we have the net meter.

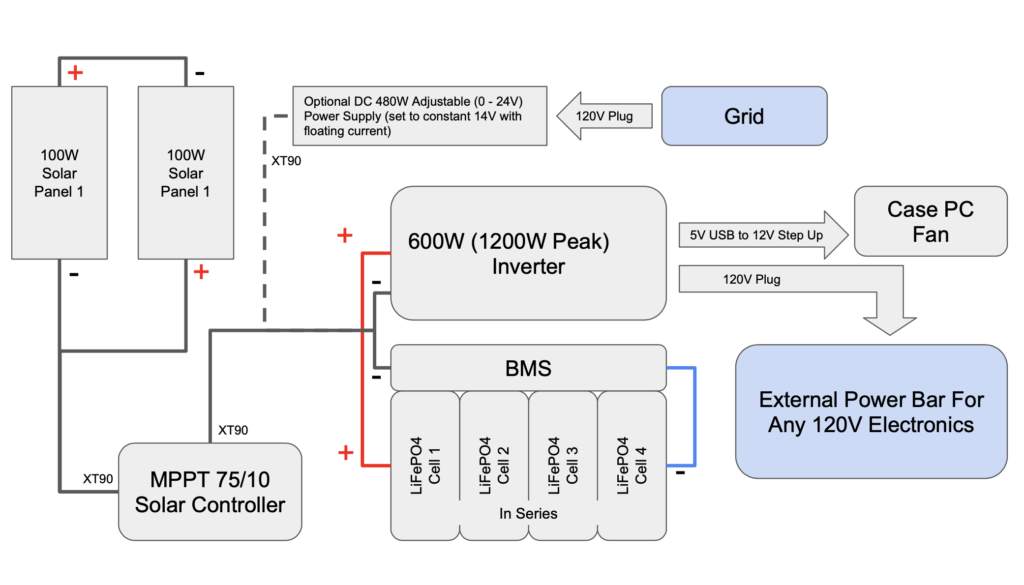

During off-grid operation, all the energy generated must be used: supply must match demand at all times. This is where the batteries come in. They buffer (store) the excess and make up any shortfall. However, when the batteries are full and our usage cannot keep up with generation, the best option is to shut down the solar and shift our consumption to the batteries. Drawing on the batteries frees up “empty” capacity, which we can later use to store more solar energy. I assumed, incorrectly, that this power regulation would be handled by the Schneider inverters. This is not the case, at least not fully. I will not go into the details of Frequency Shift Power Control and the inverters' other shortcomings here; suffice it to say that they are not that smart. In the future, we will have to investigate a more flexible power regulation mechanism for off-grid operation.
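Here is a minimal sketch of the off-grid balance described above, for a single time step. It assumes an idealized battery with no efficiency losses and no charge or discharge rate limits; the names and the numbers in the example are hypothetical, not measurements from our system. Surplus generation charges the battery until it is full, whatever is left over must be curtailed (that is, the solar shut down), and any deficit is drawn from the battery.

```python
from dataclasses import dataclass


@dataclass
class StepResult:
    stored_kwh: float     # energy in the battery after the step
    curtailed_kwh: float  # surplus with nowhere to go (solar must be cut back)
    unmet_kwh: float      # load the battery could not supply


def off_grid_step(stored_kwh: float, capacity_kwh: float,
                  solar_kwh: float, load_kwh: float) -> StepResult:
    """One idealized step: supply must equal demand, the battery buffers the difference.

    Assumes a lossless battery with no charge/discharge rate limits.
    """
    surplus = solar_kwh - load_kwh
    if surplus >= 0:
        # Excess generation charges the battery until it is full.
        room = capacity_kwh - stored_kwh
        charged = min(surplus, room)
        return StepResult(stored_kwh + charged, surplus - charged, 0.0)
    # Shortfall is drawn from the battery until it is empty.
    deficit = -surplus
    discharged = min(deficit, stored_kwh)
    return StepResult(stored_kwh - discharged, 0.0, deficit - discharged)


if __name__ == "__main__":
    # Hypothetical: a 20 kWh battery that is nearly full on a sunny afternoon.
    print(off_grid_step(stored_kwh=19.0, capacity_kwh=20.0, solar_kwh=6.0, load_kwh=2.0))
    # prints: StepResult(stored_kwh=20.0, curtailed_kwh=3.0, unmet_kwh=0.0)
```

The `curtailed_kwh` term is the energy that has "no place to go" once the batteries are full; with a grid connection and a net meter, it would be exported instead.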

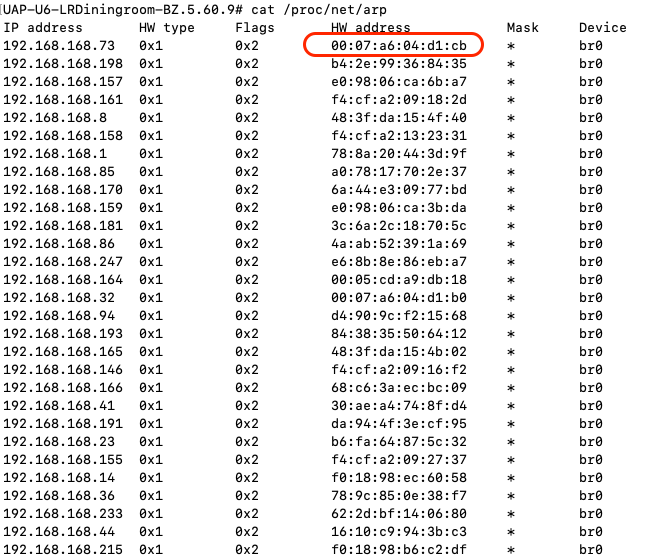

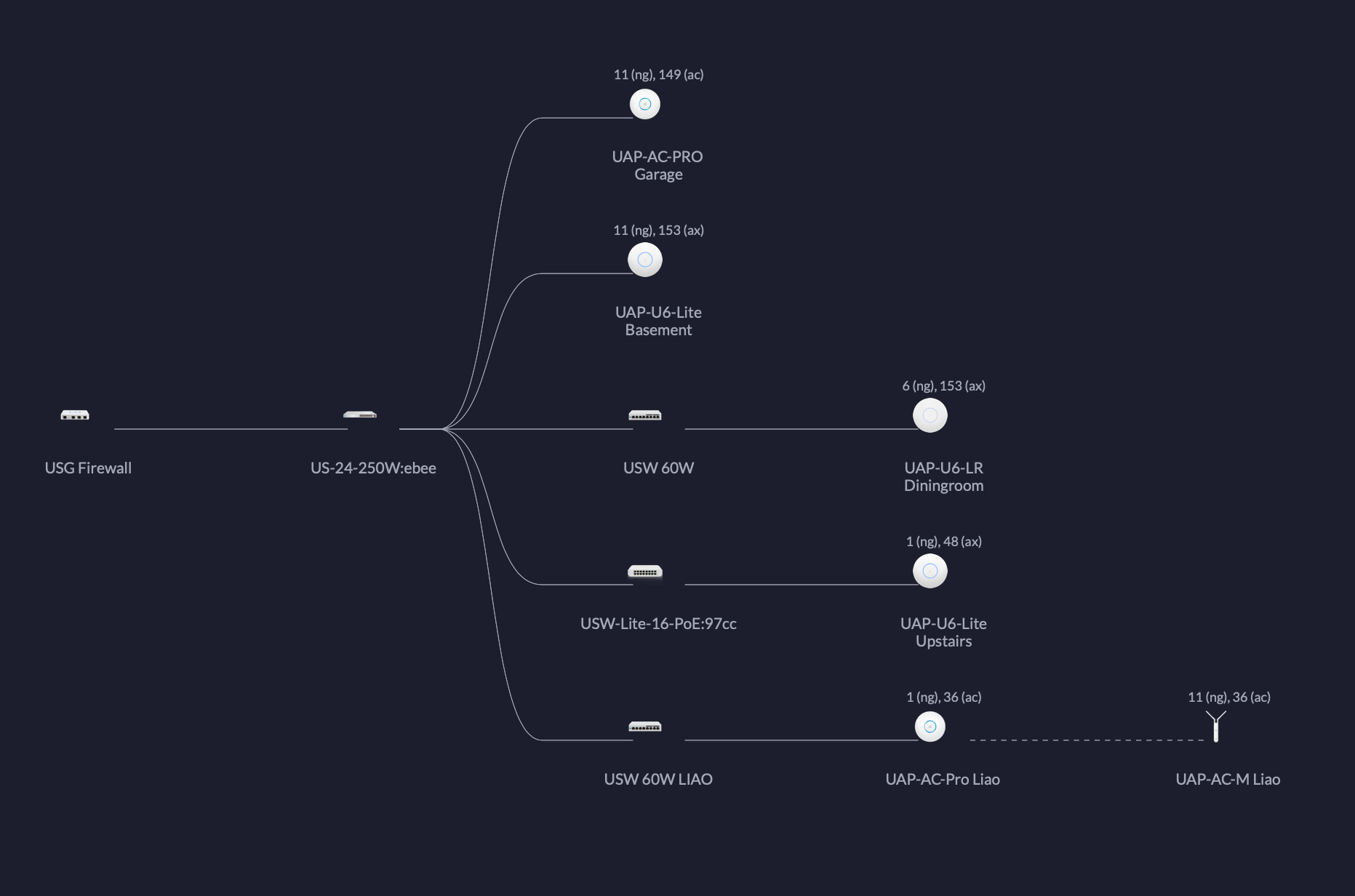

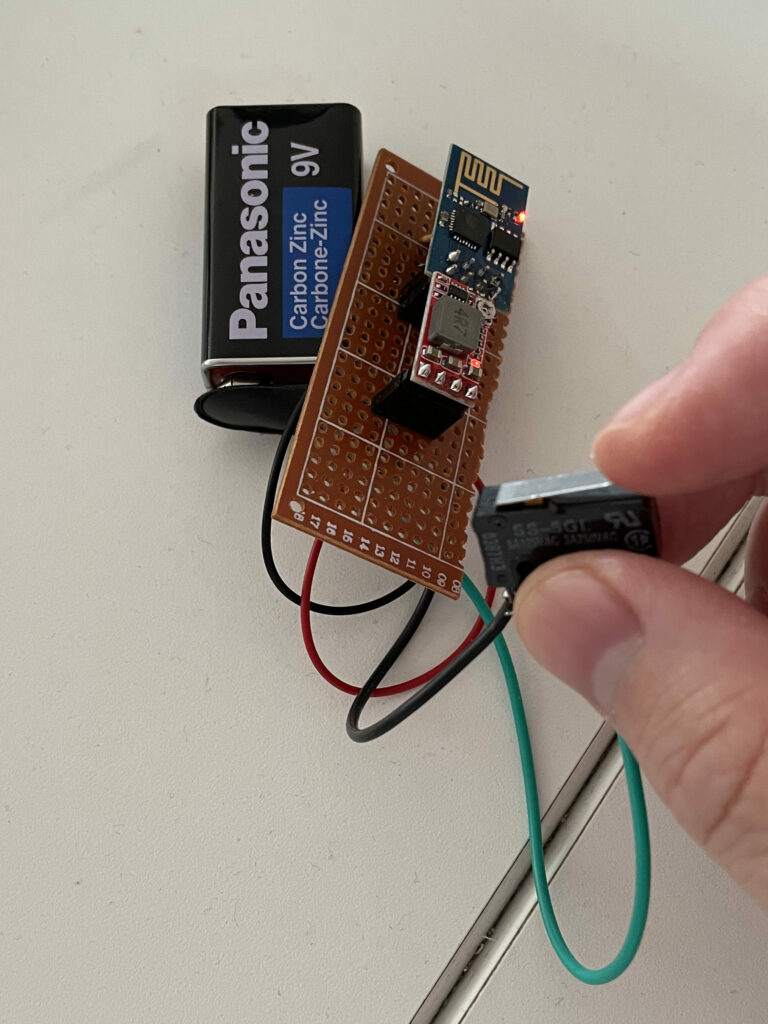

In the meantime, I have built my own tool that monitors battery usage and solar power generation so that I can decide when to turn the solar on and when to turn it off. Note that this is only needed for off-grid operation. Once we have the net meter, we can return to on-grid operation and let the grid act as the main power regulator.
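As an illustration only (not necessarily how the tool above works), one common way to make this on/off decision is hysteresis on the battery state of charge (SOC): solar is switched off when SOC reaches an upper threshold and switched back on only after SOC falls to a lower one, so it does not toggle rapidly around a single set point. The function name and the 90% / 70% thresholds are hypothetical.

```python
def solar_should_run(soc_percent: float, solar_on: bool,
                     off_above: float = 90.0, on_below: float = 70.0) -> bool:
    """Hysteresis decision for whether solar generation should be enabled off grid.

    Hypothetical thresholds: switch solar off once SOC reaches `off_above`,
    and switch it back on only after SOC drops to `on_below` or lower.
    Between the two thresholds the current state is kept, preventing rapid toggling.
    """
    if not on_below < off_above:
        raise ValueError("on_below must be lower than off_above")
    if solar_on and soc_percent >= off_above:
        return False  # batteries nearly full: surplus would have nowhere to go
    if not solar_on and soc_percent <= on_below:
        return True   # enough "empty" capacity freed up to store more sun energy
    return solar_on


if __name__ == "__main__":
    state = True
    # Hypothetical SOC readings over an afternoon and evening.
    for soc in (60, 85, 91, 88, 75, 69, 72):
        state = solar_should_run(soc, state)
        print(f"SOC {soc:>3}% -> solar {'ON' if state else 'OFF'}")
```

In practice the SOC reading would come from the battery monitor, and the returned value would drive whatever switch disconnects the solar; both of those interfaces are outside the scope of this sketch.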

Still, this is excellent experience, as it teaches us some of the challenges of off-grid living. There is no substitute for living through it.

We hope the net meter will arrive soon. Until then, we will challenge ourselves to see how many days we can stay off grid! You can already see our progress on the 23rd and 24th of this month in the Alectra utilization chart above.

Cheers! Until the next update.