Approximately three years ago, we created a holding company in Ontario, Canada, to manage certain real estate investments. After some consideration, we determined that this holding company was no longer required, so about a month ago we decided to dissolve it.

When we sent out an email requesting the dissolution of the company, we received the following response:

On the surface, this seemed like excellent news, because we would be able to do everything online. However, upon visiting Ontario.ca/BusinessRegistry, we were immediately lost after the initial login.

It took many tries to discover the navigation path that works, so I wanted to document it for other users and for my own future reference.

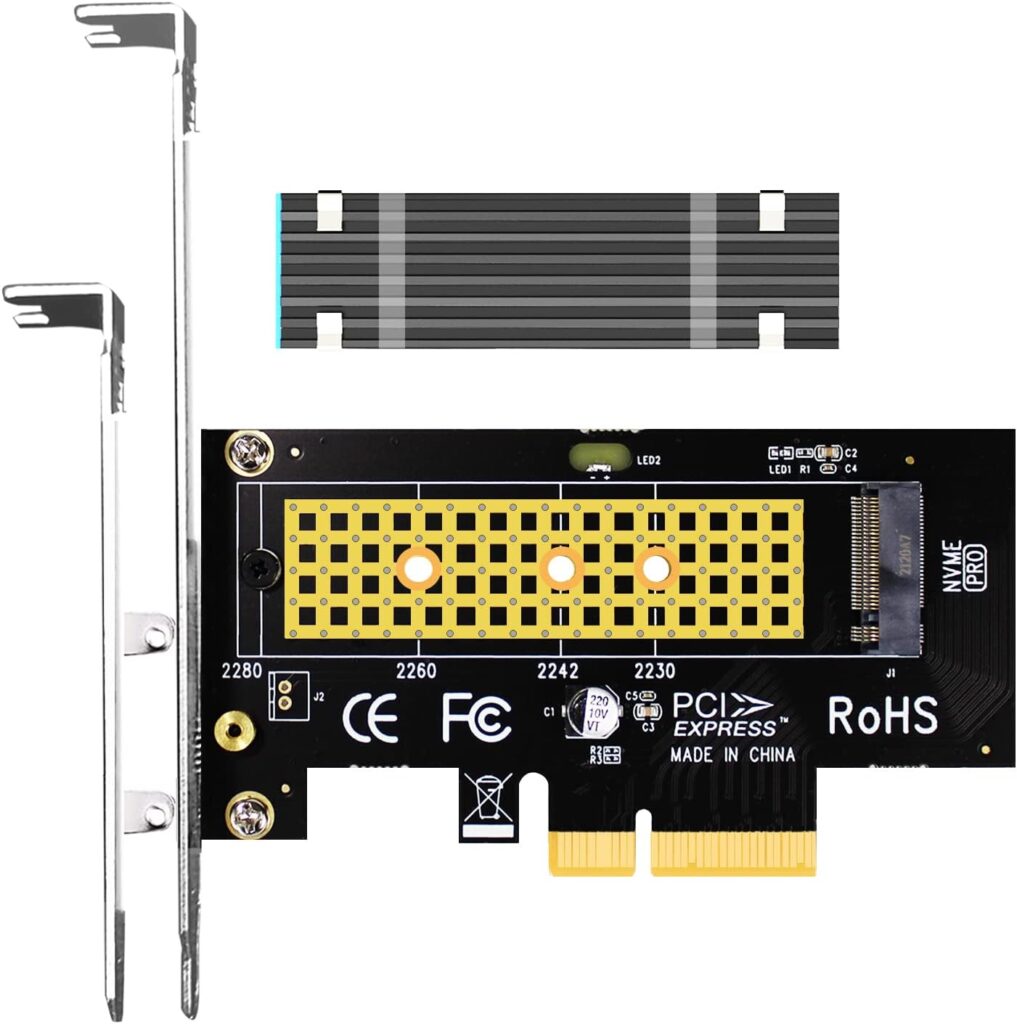

The menu options on the left, shown in the screenshot above, are not very helpful. The obvious choice is Ontario business registry under Account help, but it only leads to an outdated guide for a PDF form that you can download and fill out, a method that is now discouraged and rarely used. The correct selection is the mysterious Add a service item.

Once you are on the Add a service page, select Start now for the Ontario business registry service.

This takes you to a different site, where you select Make Changes and then, further down the page, File Articles of Dissolution.

The entire experience feels as though the website was put together by multiple contractors, and it is thoroughly user-unfriendly. Yet another government service experience.