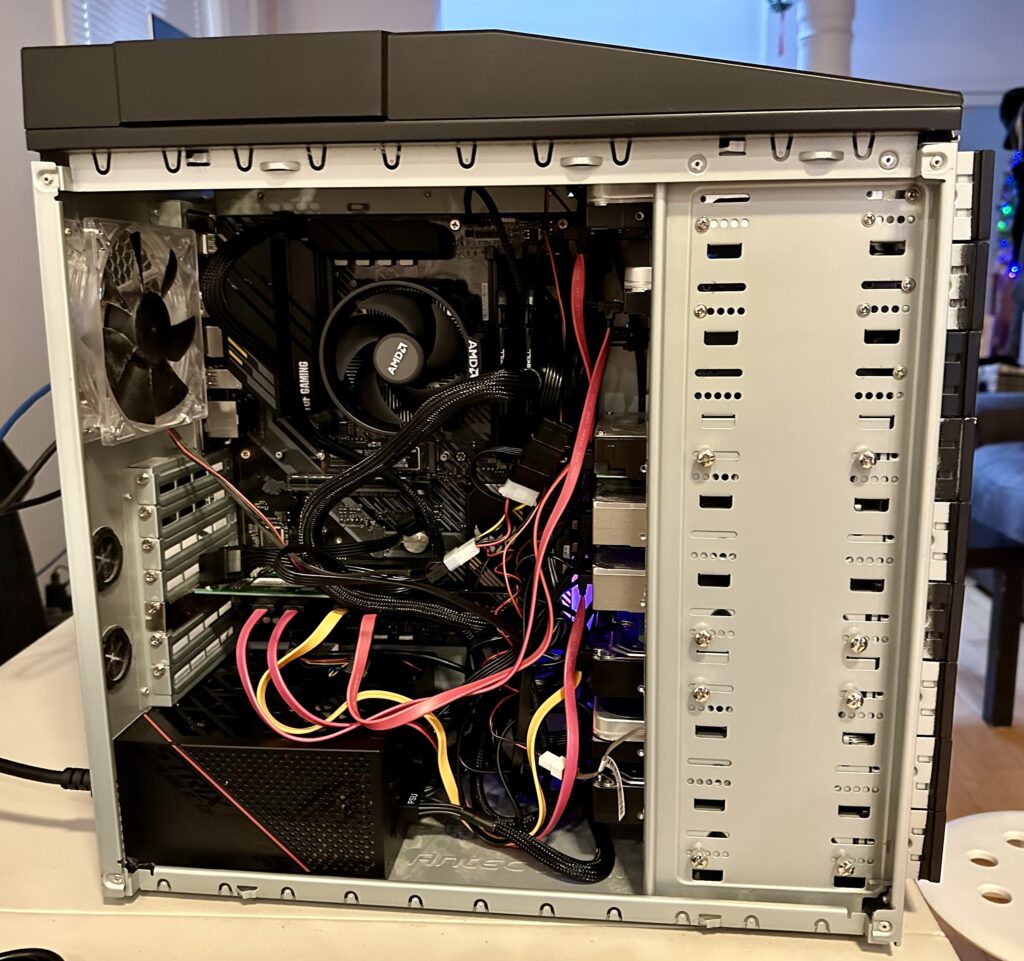

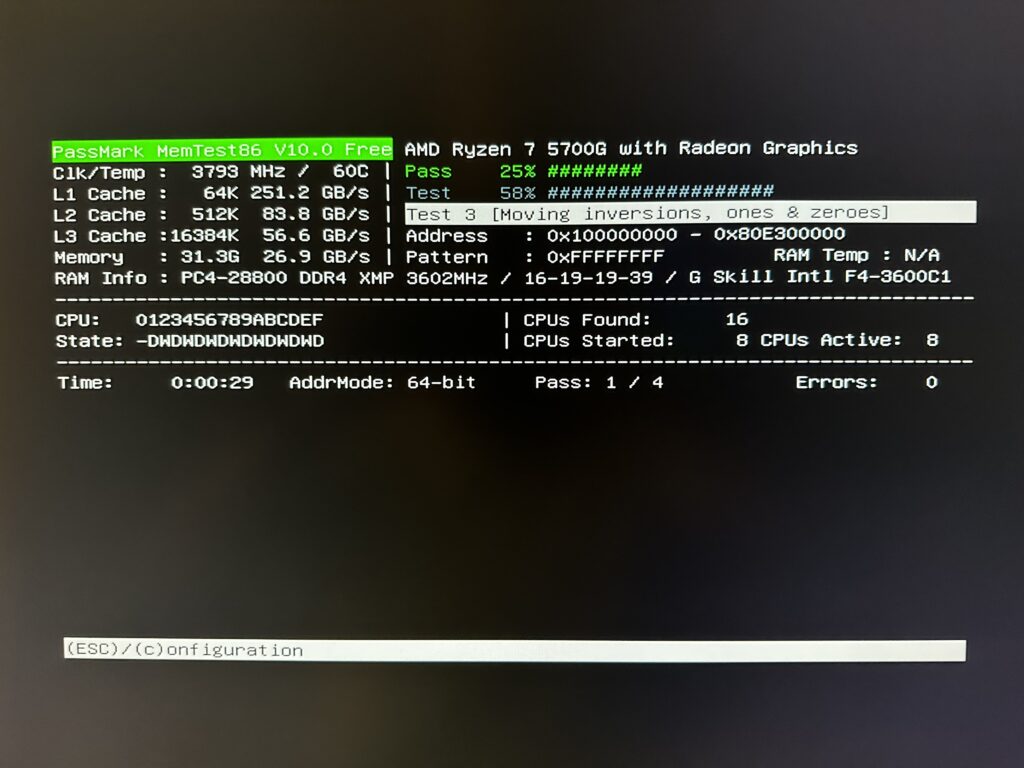

In the first part of this post, I talked about making sure all the new hardware that I recently purchased works. Yesterday, upgrading from Ubuntu 20.04 LTS to 22.04 LTS was super simple. Unfortunately, that was the end of the easy part.

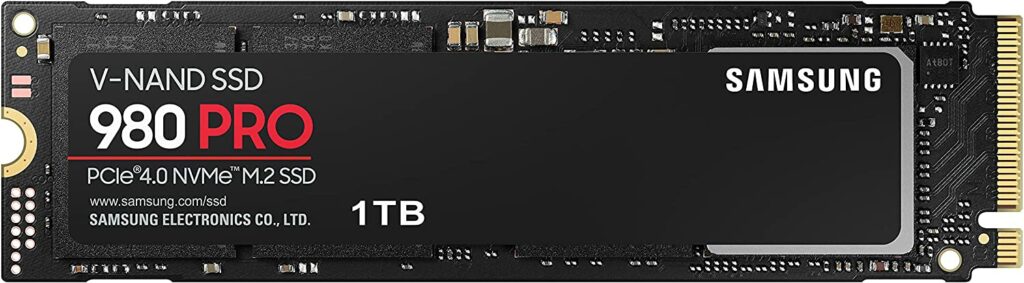

I thought I could just image my old boot drive and make a carbon copy of it on my new boot drive. My old boot drive is a simple SATA 512GB SSD, and my new boot drive is an NVMe M.2 1TB SSD plugged directly into the motherboard. The copying was pretty simple, but because the drives differ in size, I had to lay out the partition table again on the new drive once the copy was completed. I did this with the parted command.

Unfortunately the new boot drive did not want to boot. At this point I had to do some research. The most helpful articles were:

- How to copy an Ubuntu install from one laptop to another

- How to Configure the GRUB2 Boot Loader’s Settings

Both of the above articles were an excellent refresher on how GRUB works. I have used GRUB since the beginning, but one gets super rusty when these types of tasks are only performed once every three or six years!

Instead of detailing what went wrong, I will just explain what I should have done. This way if I need it again in the future, it is here for my reference.

Step 1: Perform a backup of the old boot drive from a Live USB in shell mode. This is done on my server on a nightly basis. This method is clearly described on the Ubuntu Community Help Wiki.

Following this method I will end up with a compressed tar archive for my entire root directory, skipping some runtime and other unwanted directories.
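
For my own reference, here is a minimal sketch of such a backup. It assumes GNU tar and that the old root partition is mounted somewhere on the Live USB. The function name backup_root, the exclude list, and the paths in the usage note are my own illustration, not the exact command from the wiki.

```shell
# Hypothetical helper: archive the tree under SRC into ARCHIVE (gzip-compressed),
# keeping permissions and leaving out the contents of runtime directories.
backup_root() {
  local src="$1" archive="$2"
  # The excludes come before the file argument so that tar applies them to it.
  tar --create --gzip --preserve-permissions --one-file-system \
      --file="$archive" \
      --exclude='./proc/*' --exclude='./sys/*' --exclude='./dev/*' \
      --exclude='./run/*' --exclude='./tmp/*' --exclude='./mnt/*' \
      --exclude='./media/*' --exclude='./lost+found' \
      --directory="$src" .
}
```

From a root shell this could be called as `backup_root /mnt/oldroot /media/backup/old-root.tar.gz`, where both paths are placeholders. The archive should live outside the tree being backed up so that it does not try to include itself.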

Step 2: After performing a fresh install of the new Ubuntu LTS Server operating system on the new server and boot drive, I backed up the new boot drive with the same technique used in Step 1. I stored this backup on another external SSD that I had lying around. It is also important that the partition layout of the new install includes a swap partition.

Step 3: I then restored the most recent backup of the old boot drive (made in Step 1) to the new boot drive, and replaced the /boot/grub directory with the contents from the new install that were backed up in Step 2. GRUB itself was already installed on the drive when we performed the brand new installation. We just want to make sure the /boot/grub contents match the boot partition.
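
A rough sketch of this restore, assuming GNU tar and that both archives were created relative to their root directory, so that members are named like ./boot/grub/grub.cfg. The function name restore_with_new_grub and the argument layout are hypothetical.

```shell
# Hypothetical helper: unpack the old system's backup into TARGET, then replace
# TARGET/boot/grub with the copy saved from the fresh install.
restore_with_new_grub() {
  local old_archive="$1" new_archive="$2" target="$3"
  # Refuse to run without a real target directory, since we delete below it.
  [ -n "$target" ] && [ -d "$target" ] || return 1
  # Step 1 backup: the complete old system.
  tar --extract --gzip --preserve-permissions --numeric-owner \
      --file="$old_archive" --directory="$target" || return 1
  # Drop the old GRUB files so nothing stale is left behind.
  rm -rf "$target/boot/grub"
  # Step 2 backup: only the boot/grub directory from the fresh install.
  tar --extract --gzip --preserve-permissions --numeric-owner \
      --file="$new_archive" --directory="$target" ./boot/grub
}
```

The target would be the mount point of the new root partition, for example `restore_with_new_grub old-root.tar.gz new-root.tar.gz /mnt/newroot` with placeholder names. Deleting the old directory first is a design choice so that modules from the old GRUB version cannot linger next to the new ones.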

Step 4: We also need to fix up the /etc/fstab file because it contains references to drive devices from the old hardware. Pay special attention to the main data partition and the swap partition. It should look something like this:

    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a
    # device; this may be used with UUID= as a more robust way to name devices
    # that works even if disks are added and removed. See fstab(5).
    #
    # <file system> <mount point> <type> <options> <dump> <pass>
    # / was on /dev/nvme1n1p2 during curtin installation
    UUID=fc939be4-5292-4252-8120-7ef59b177e5b / ext4 defaults 0 1
    # /boot/efi was on /dev/nvme0n1p1 during curtin installation
    UUID=5187-A8C6 /boot/efi vfat defaults 0 1
    # Swap partition
    UUID=512d611e-6944-4a57-9748-ea68e9ec3fad none swap sw 0 0
    # /dev/mapper/airvideovg2-airvideo /mnt/airvideo ext4 rw,noatime 0 0
    UUID=9e78425c-c1f3-4285-9fa1-96cac9114c55 /mnt/airvideo ext4 rw,noatime 0 0

Notice that I also added the LVM logical volume for /mnt/airvideo, which is my RAID-1 array. The UUIDs can be obtained with the blkid command. Below is a sample output:

    % blkid
    /dev/sdf1: UUID="60024298-9915-3ad8-ae6c-ed7adc98ee62" UUID_SUB="fe08d23c-8e11-e02b-63f9-1bb806046db7" LABEL="avs:4" TYPE="linux_raid_member" PARTLABEL="primary" PARTUUID="552bdff7-182f-40f0-a378-844fdb549f07"
    /dev/nvme0n1p1: UUID="r2rLMD-BEnc-wcza-yvro-chkB-1vB6-6Jtzgz" TYPE="LVM2_member" PARTLABEL="primary" PARTUUID="6c85af69-19a0-4720-9588-808bc0d818f7"
    /dev/sdd1: UUID="34c6a19f-98ea-0188-bb3f-a5f5c3be238d" UUID_SUB="4174d106-cae4-d934-3ed4-5057531acb3c" LABEL="avs:3" TYPE="linux_raid_member" PARTLABEL="primary" PARTUUID="2fc4e9ad-be4b-48aa-8115-f32472e61005"
    /dev/sdb1: UUID="ac438ac6-344a-656b-387f-017036b0fafa" UUID_SUB="0924dc67-cd3f-dec5-1814-ab46ebdf2fbe" LABEL="avs:1" TYPE="linux_raid_member" PARTUUID="29e7cfce-9e7b-4067-a0ca-453b39e0bd3d"
    /dev/md4: UUID="gjbtdL-homY-wyRG-rUBw-lFgm-t0vZ-Gi8gSz" TYPE="LVM2_member"
    /dev/md2: UUID="0Nky5e-52t6-b1uZ-GAIl-4Ior-XWTz-wFpHh1" TYPE="LVM2_member"
    /dev/sdi1: UUID="5b483ac2-5b7f-4951-84b2-08adc602f705" BLOCK_SIZE="4096" TYPE="ext4" PARTLABEL="data" PARTUUID="e0515517-9fbb-4d8a-88ad-674622f20e00"
    /dev/sdg1: UUID="3d1afb64-8785-74e6-f9be-b68600eebdd5" UUID_SUB="c146cd05-8ee8-5804-b921-6d87cdd4a092" LABEL="avs:2" TYPE="linux_raid_member" PARTLABEL="lvm" PARTUUID="2f25ec17-83c4-4c0b-8653-600283d58109"
    /dev/sde1: UUID="34c6a19f-98ea-0188-bb3f-a5f5c3be238d" UUID_SUB="8aabfe5b-af16-6e07-17c2-3f3ceb1514e3" LABEL="avs:3" TYPE="linux_raid_member" PARTLABEL="primary" PARTUUID="2fc4e9ad-be4b-48aa-8115-f32472e61005"
    /dev/sdc1: UUID="ac438ac6-344a-656b-387f-017036b0fafa" UUID_SUB="c188f680-01a8-d5b2-f8bc-9f1cc1fc3598" LABEL="avs:1" TYPE="linux_raid_member" PARTUUID="29e7cfce-9e7b-4067-a0ca-453b39e0bd3d"
    /dev/nvme1n1p2: UUID="fc939be4-5292-4252-8120-7ef59b177e5b" BLOCK_SIZE="4096" TYPE="ext4" PARTUUID="912e805d-fe68-48f8-b845-9bba0e3e8c78"
    /dev/nvme1n1p3: UUID="512d611e-6944-4a57-9748-ea68e9ec3fad" TYPE="swap" PARTLABEL="swap" PARTUUID="04ac46ff-74f3-499a-814d-32082f6596d2"
    /dev/nvme1n1p1: UUID="5187-A8C6" BLOCK_SIZE="512" TYPE="vfat" PARTUUID="fe91a6b2-9cd3-46af-813a-b053a181af52"
    /dev/sda1: UUID="3d1afb64-8785-74e6-f9be-b68600eebdd5" UUID_SUB="87fe80a1-4a79-67f3-273e-949e577dd5ee" LABEL="avs:2" TYPE="linux_raid_member" PARTUUID="c8dce45e-5134-4957-aee9-769fa9d11d1f"
    /dev/md3: UUID="XEJI0m-PEmZ-VFiI-o4h0-bnQc-Y3Be-3QHB9n" TYPE="LVM2_member"
    /dev/md1: UUID="usz0sA-yO01-tlPL-12j2-2C5r-Ukhc-9RLCaX" TYPE="LVM2_member"
    /dev/mapper/airvideovg2-airvideo: UUID="9e78425c-c1f3-4285-9fa1-96cac9114c55" BLOCK_SIZE="4096" TYPE="ext4"
    /dev/sdh1: UUID="60024298-9915-3ad8-ae6c-ed7adc98ee62" UUID_SUB="a1291844-6587-78b0-fcd1-65bc367068e5" LABEL="avs:4" TYPE="linux_raid_member" PARTLABEL="primary" PARTUUID="ed0274b9-21dc-49bf-bdda-566b2727ddc2"
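
Since a stale UUID in /etc/fstab is an easy mistake to make here, a small cross-check may help. The sketch below assumes the blkid output has been saved to a text file in the format shown above. The helper names fstab_uuids and missing_uuids are my own.

```shell
# Hypothetical helper: print the UUID of every fstab entry that mounts by UUID=.
# Comment lines are skipped because their first field is "#".
fstab_uuids() {
  awk '$1 ~ /^UUID=/ { sub(/^UUID=/, "", $1); print $1 }' "$1"
}

# Hypothetical helper: print each fstab UUID that does not appear as a
# filesystem UUID in the saved blkid output.
missing_uuids() {
  local fstab="$1" blkid_out="$2" uuid
  for uuid in $(fstab_uuids "$fstab"); do
    # The leading space keeps PARTUUID="..." fields from matching.
    grep -qF " UUID=\"$uuid\"" "$blkid_out" || echo "$uuid"
  done
}
```

One way to use it is to run `sudo blkid > /tmp/blkid.txt` followed by `missing_uuids /etc/fstab /tmp/blkid.txt`, where /tmp/blkid.txt is just a placeholder file name. Any UUID it prints is referenced in fstab but not present on the current hardware.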

Step 4B (Potentially): If the system boots into the “grub>” prompt, then we will have to persuade GRUB to boot manually by entering the following at the prompt:

    grub> set root=(hd9,gpt2)
    grub> linux /boot/vmlinuz root=/dev/nvme1n1p2
    grub> initrd /boot/initrd.img
    grub> boot

To find the root value on the first line, you have to use the ls command, which is explained in this article. The root parameter on the linux line references the partition on which the root directory is mounted. In my case, it was /dev/nvme1n1p2.

After I rebooted, I reinstalled GRUB with the following as super user:

    grub-install /dev/nvme1n1

It may also be required to update our initramfs using:

    update-initramfs -c -k all

Step 5: At this point the system should reboot, and all of the old server’s content should now be on the new hardware. Unfortunately, we will need to fix the network interface.

First obtain the MAC address of the network interface using:

    % sudo lshw -C network | grep serial
    serial: 04:42:1a:05:d3:c4

And then we will have to edit the /etc/netplan/00-installer-config.yaml file.

    % cat /etc/netplan/00-installer-config.yaml
    # This is the network config written by 'subiquity'
    network:
      ethernets:
        enp6s0:
          dhcp4: true
          match:
            macaddress: 04:42:1a:05:d3:c4
          set-name: enp6s0
      version: 2

Ensure that the MAC address matches the one reported by lshw and that the name is the same as on the old system. The name in this example is enp6s0. We then need to execute the following commands to generate the interface.

    netplan generate
    netplan apply

We need to ensure the name matches because many services on the server have configurations that reference the interface name, such as:

- Configurations in /var/network/interfaces

- Samba (SMB) (/etc/samba/smb.conf)

- Pihole (/etc/pihole/setupVars.conf)

- Homebridge (/var/lib/homebridge/config.json)
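
To see which of these files actually mention the interface name, a quick search can help. This is only a sketch: the helper name configs_referencing is hypothetical, and it simply looks for the name as a whole word in the files it is given.

```shell
# Hypothetical helper: print each of the given files that mentions IFACE
# as a whole word. Files that are missing or unreadable are skipped.
configs_referencing() {
  local iface="$1"; shift
  local f
  for f in "$@"; do
    [ -r "$f" ] || continue
    grep -qwF -- "$iface" "$f" && echo "$f"
  done
  return 0
}
```

For example, `configs_referencing enp6s0 /etc/samba/smb.conf /etc/pihole/setupVars.conf /var/lib/homebridge/config.json` lists the files from the list above that would need editing if the interface name ever changed.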

Step 6: Update the DHCP configuration on the router so that the new server is assigned the same fixed IP address as the old server. This is important because there may be firewall rules referencing this IP address directly. The hostname should have been automatically restored when we restored the partition in Step 3.

Step 7: Our final step is to test the various services and ensure they are working properly. These include:

- Our web site lufamily.ca

- Homebridge

- Plex

- Pihole (DNS server)

- SMB (File sharing)
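
A first pass over these services can be scripted. The sketch below assumes the services run as systemd units and only checks whether each unit is active. The helper name report_units and the SYSTEMCTL override are my own, and the unit names in the usage note are guesses that would need to be checked against the actual system.

```shell
# Hypothetical helper: report whether each named systemd unit is active.
# SYSTEMCTL can be overridden for testing; it defaults to the real systemctl.
report_units() {
  local systemctl="${SYSTEMCTL:-systemctl}" unit failed=0
  for unit in "$@"; do
    if "$systemctl" is-active --quiet "$unit"; then
      echo "OK   $unit"
    else
      echo "FAIL $unit"
      failed=1
    fi
  done
  # Non-zero exit status if any unit was not active.
  return "$failed"
}
```

A call might look like `report_units smbd plexmediaserver homebridge pihole-FTL`, assuming those are the unit names in use. An active unit does not prove the service works end to end, so opening the web site and resolving a name through Pihole is still worthwhile.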

Finally, the new system is complete!